Background: In this series of posts, I’ve been using medical device development (as regulated by the U.S. FDA via 21 CFR 820.30 and the international standard IEC 62304) as an exemplar for suggesting ways to develop high-quality software in regulated (and other high assurance, high economic cost of failure) environments in an agile manner. This series is sponsored, in part, by Rally Software Development, with special thanks to Craig Langenfeld for his contribution.

=====================================

A note on V&V: As we described in earlier posts, there is no perfect discriminator between verification and validation activities. In agile, however, verification (…providing objective evidence that the design outputs of a particular phase of the software development life cycle meet all of the specified requirements for that phase) occurs primarily and continuously during the course of each iteration. Validation (… confirmation by examination and provision of objective evidence that software specifications conform to user needs and intended uses, and that the particular requirements implemented through software can be consistently fulfilled) typically occurs in the course of special iterations dedicated to this purpose. Generally, the testing of nonfunctional requirements occurs during these special iterations, and it is therefore probably sensible to think of this testing as primarily a validation activity.

=====================================

The first 90% of the software takes 90% of the development time. The remaining 10% of the code takes up the other 90% of the time.

— Tom Cargill, Bell Labs

In the last post, we described testing the features described in the Product Requirements Document as an important verification activity, which is largely independent of the testing we perform for the individual stories. In this post, we’ll describe the other dimension of product requirements: the nonfunctional requirements (typically also contained in the PRD) that describe the “ilities” of the subject device or system. If we are not careful, these special “quality requirements” will take up the “other 90%” of our total development time.

In Agile Software Requirements, I described nonfunctional requirements, and their even uglier stepsister, design constraints, as the “URPS” part of our FURPS (Functionality, Usability, Reliability, Performance and Supportability) acronym and noted the following discriminators:

| Requirement type | Description |
| --- | --- |
| Functional requirements | Express how the system interacts with its users: its inputs, its outputs, the functions and features it provides |
| Nonfunctional requirements | Criteria used to judge the operation or qualities of a system |
| Design constraints | Restrictions on the design of a system, or the process by which a system is developed, that must be fulfilled to meet technical, business, or contractual obligations |

It can be useful to think about the major categories of NFRs via the “URPS” acronym:

Usability – Includes elements such as expected training times, task times, number of control activities required to accomplish a function, help systems, compliance with usability standards, usability safety features

Reliability – includes such things as availability, mean time between failures (MTBF), mean time to repair (MTTR), accuracy, precision, security, safety and override features

Performance – response time, throughput, capacity, scalability, degradation modes, resource utilization

Supportability (Maintainability) – ability of the software to be easily modified to accommodate planned enhancements and repairs

Note: A more complete list of potential nonfunctional requirement considerations can be found in my book, Agile Software Requirements, and even on Wikipedia (http://en.wikipedia.org/wiki/Non-functional_requirement).
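To make a reliability NFR testable, it helps to state it as a number. As a minimal sketch (not taken from any particular device project), the snippet below uses the common steady-state formula, availability = MTBF / (MTBF + MTTR), to check a measured system against a required availability. The function names and the figures are hypothetical and chosen only for illustration.

```python
def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability: the fraction of time the system is operational."""
    if mtbf_hours <= 0 or mttr_hours < 0:
        raise ValueError("MTBF must be positive and MTTR non-negative")
    return mtbf_hours / (mtbf_hours + mttr_hours)


def meets_availability_nfr(mtbf_hours: float, mttr_hours: float, required: float) -> bool:
    """True when the computed availability satisfies the required minimum."""
    return availability(mtbf_hours, mttr_hours) >= required


# Hypothetical figures: 2,000 hours between failures, 4 hours to repair,
# and an NFR that asks for at least 99.5% availability.
print(round(availability(2000.0, 4.0), 4))
print(meets_availability_nfr(2000.0, 4.0, 0.995))
```

Writing the threshold into the requirement itself ("at least 99.5% availability") is what lets a system qualities test give a pass or fail answer at each increment.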

Design constraints can also be particularly relevant in the development of high assurance systems. Examples include following all internal processes per the company's Quality Management System, using only components which themselves have been validated, and adhering to comprehensive safety standards such as IEC 60601-1 (which covers generic safety requirements for medical devices, including a list of hazards and their tolerable limit of risk), as well as a potentially long list of other such requirements.

No matter their nature or source, these requirements are just as critical as the functional requirements we’ve described in user stories and features. If a system isn’t reliable (it becomes unavailable on occasion), isn’t marketable (it fails to meet some regulatory requirement), or isn’t as accurate as the patient/user requires, then, agile or not, we will fail just as badly as if we forgot some critical functional requirement.

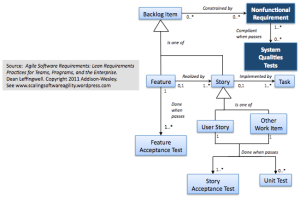

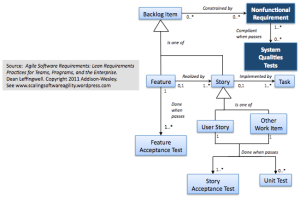

As seen in the requirements metamodel below, we’ve modeled them a little differently than we did features and user stories, which from an agile implementation perspective were all modeled as transient backlog items.

This is because the implementation of features and stories tends to be somewhat transient in nature, and (subject to automated testing and verification infrastructure) you can discover-them, implement-them, auto-test-them and “almost-forget-them”.

Such is rarely the case with NFRs, as many of these must be revisited at every increment to make sure the system, with all its new features, still meets this set of imposed quality requirements.

Testing Nonfunctional Requirements

To assure that the system works as intended, and as illustrated by the metamodel, most identified NFRs must typically be associated with some “System Qualities Test” (0..*), which is used to validate that the system is still in compliance with that specific NFR. A single system qualities test may also cover multiple NFRs (1..*).

Figure: Nonfunctional requirements and system qualities tests
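The association between NFRs and system qualities tests can be sketched as a small data model. This is only an illustration of the multiplicities described above, not a rendering of the actual metamodel: the class names, fields, and sample requirements are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class SystemQualitiesTest:
    name: str
    automated: bool  # False means the test has to be run by hand


@dataclass
class NonfunctionalRequirement:
    identifier: str
    statement: str
    # 0..* association: an NFR may have no test yet, or several.
    tests: list[SystemQualitiesTest] = field(default_factory=list)


def untested(nfrs: list[NonfunctionalRequirement]) -> list[str]:
    """Identifiers of NFRs that no system qualities test covers yet."""
    return [n.identifier for n in nfrs if not n.tests]


def nfrs_covered_by(test: SystemQualitiesTest,
                    nfrs: list[NonfunctionalRequirement]) -> list[str]:
    """Identifiers of the NFRs that a given test helps validate (1..*)."""
    return [n.identifier for n in nfrs if any(t is test for t in n.tests)]


# Hypothetical sample data, loosely based on the examples in this post.
safety_review = SystemQualitiesTest("IEC 60601-1 safety review", automated=False)
pressure_bench = SystemQualitiesTest("Pressure accuracy bench test", automated=False)

nfrs = [
    NonfunctionalRequirement("NFR-1", "Adhere to IEC 60601-1", [safety_review]),
    NonfunctionalRequirement("NFR-2", "Application pressure accuracy",
                             [pressure_bench, safety_review]),
    NonfunctionalRequirement("NFR-3", "Use only validated components"),
]

print(untested(nfrs))
print(nfrs_covered_by(safety_review, nfrs))
```

A query like `untested` is the kind of check a team might run before a validation sprint to find NFRs that still have no associated test.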

These types of requirements are indeed “special” in the way we need to think about them, for example:

- some can be tested by inspection only. Example: use only components which themselves have been validated

- some must be tested with special harnessing or equipment, and therefore may not be practical in each iteration. Example: application pressure must be accurate to within +/- 50 millibars across the entire operating range.

- some require continuous reasoning and analysis each time the system behavior changes (at each increment). Example: adhere to IEC 60601-1 device safety standard.
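Even when the measurement itself needs special equipment, the pass/fail evaluation of the recorded data can often be scripted. Here is a minimal sketch for the pressure accuracy example, assuming the tolerance is read as plus or minus 50 millibars and that the bench produces pairs of commanded and measured pressure. The function name and the readings are hypothetical.

```python
TOLERANCE_MBAR = 50.0  # assumed reading of the example NFR: within +/- 50 millibars


def out_of_tolerance(readings, tolerance=TOLERANCE_MBAR):
    """Return the (setpoint, measured) pairs whose error exceeds the tolerance."""
    return [(setpoint, measured)
            for setpoint, measured in readings
            if abs(measured - setpoint) > tolerance]


# Hypothetical bench data in millibars: (commanded pressure, reference gauge reading),
# sampled at several points across the operating range.
bench_readings = [
    (100.0, 112.0),
    (500.0, 468.0),
    (1000.0, 1049.5),
    (1500.0, 1551.0),
]

failures = out_of_tolerance(bench_readings)
print(failures)
```

The NFR says "across the entire operating range", so the sample points need to span that range. A check at a single setpoint would not be evidence of compliance.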

As we can see, the testing of many NFRs is simply not automatable, and therefore some amount of manual NFR testing is left to the validation sprint. If the items can be automated, the teams should surely do so, but either way, comprehensive regression testing (and documentation of results) of these “system qualities” is an integral part of the validation process.
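One way to keep manual and automated results in the same regression record is sketched below. It is an illustration only, assuming that an increment counts as validated when every system qualities test has a recorded passing result. The field names, dates, and evidence references are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class QualityTestResult:
    test_name: str
    automated: bool
    passed: Optional[bool] = None        # None: not yet executed for this increment
    executed_on: Optional[date] = None
    evidence: str = ""                   # hypothetical pointer to a report or log


def outstanding(results):
    """Names of tests that still lack a recorded passing result."""
    return [r.test_name for r in results if r.passed is not True]


def increment_validated(results):
    """True only when there is at least one result and none is outstanding."""
    return bool(results) and not outstanding(results)


# Hypothetical record for one increment.
results = [
    QualityTestResult("Pressure accuracy bench test", automated=False, passed=True,
                      executed_on=date(2024, 1, 15), evidence="bench-report-017"),
    QualityTestResult("IEC 60601-1 safety review", automated=False),
    QualityTestResult("Response time regression", automated=True, passed=True,
                      executed_on=date(2024, 1, 15)),
]

print(outstanding(results))
print(increment_validated(results))
```

Treating "not yet run" the same as "failed" keeps a skipped manual test from slipping through the validation sprint unnoticed.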

Conclusion

In this post, we’ve described how full regression testing of a system's nonfunctional (quality) requirements is a validation activity typically required at each increment (PSI) boundary, and how some of this effort will likely be manual. To this point, we’ve described most of the testing activities required for building systems of high assurance. In the next post, we’ll take an even broader look at agile testing practices in the context of high assurance development.